Kubernetes: the design of kube-scheduler

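kube-scheduler is one of the core components of Kubernetes (k8s). Its main job is to select a suitable node for each pod and bind the pod to it. The overall flow has three parts:

- Get the list of unscheduled pods (podList)
- Run a series of scheduling algorithms to select a suitable node
- Submit the data to the apiServer, then perform the bind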


    For given pod:

        +---------------------------------------------+
        |               Schedulable nodes:            |
        |                                             |
        | +--------+    +--------+      +--------+    |
        | | node 1 |    | node 2 |      | node 3 |    |
        | +--------+    +--------+      +--------+    |
        |                                             |
        +-------------------+-------------------------+
                            |
                            |
                            v
        +-------------------+-------------------------+

        Pred. filters: node 3 doesn't have enough resource

        +-------------------+-------------------------+
                            |
                            |
                            v
        +-------------------+-------------------------+
        |             remaining nodes:                |
        |   +--------+                 +--------+     |
        |   | node 1 |                 | node 2 |     |
        |   +--------+                 +--------+     |
        |                                             |
        +-------------------+-------------------------+
                            |
                            |
                            v
        +-------------------+-------------------------+

        Priority function:    node 1: p=2
                              node 2: p=5

        +-------------------+-------------------------+
                            |
                            |
                            v
                select max{node priority} = node 2
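The filter-then-score flow in the diagram can be sketched in a few lines of Go. This is only a minimal illustration, not the scheduler's real code: the `Node` and `Pod` types, the CPU-only predicate, and the "most free CPU wins" priority function are all assumptions made for the example.

```go
package main

import (
	"errors"
	"fmt"
)

// Node is a hypothetical, simplified view of a cluster node.
type Node struct {
	Name    string
	FreeCPU int // free CPU in millicores
}

// Pod is a hypothetical, simplified pod that only requests CPU.
type Pod struct {
	Name   string
	CPUReq int // requested CPU in millicores
}

// filterNodes is the predicate step: keep only nodes that can fit the pod.
func filterNodes(pod Pod, nodes []Node) []Node {
	var fit []Node
	for _, n := range nodes {
		if n.FreeCPU >= pod.CPUReq {
			fit = append(fit, n)
		}
	}
	return fit
}

// scoreNode is a toy priority function: the more CPU left after
// placing the pod, the higher the score.
func scoreNode(pod Pod, n Node) int {
	return n.FreeCPU - pod.CPUReq
}

// selectNode filters, scores, and returns the highest-scoring node.
func selectNode(pod Pod, nodes []Node) (Node, error) {
	fit := filterNodes(pod, nodes)
	if len(fit) == 0 {
		return Node{}, errors.New("no node fits the pod")
	}
	best := fit[0]
	for _, n := range fit[1:] {
		if scoreNode(pod, n) > scoreNode(pod, best) {
			best = n
		}
	}
	return best, nil
}

func main() {
	nodes := []Node{
		{Name: "node 1", FreeCPU: 1200},
		{Name: "node 2", FreeCPU: 1500},
		{Name: "node 3", FreeCPU: 500}, // filtered out: not enough CPU
	}
	pod := Pod{Name: "demo", CPUReq: 1000}
	chosen, err := selectNode(pod, nodes)
	if err != nil {
		fmt.Println("unschedulable:", err)
		return
	}
	fmt.Printf("bind %s to %s\n", pod.Name, chosen.Name)
}
```

With these made-up numbers, node 3 is dropped by the filter and node 2 wins the scoring step, which mirrors the diagram. The real scheduler would then submit the binding to the apiServer instead of printing it.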

Main flow analysis

cmd/kube-scheduler/app/server.go

    Run: func(cmd *cobra.Command, args []string) {

Parsing command-line arguments and creating the scheduler

cmd/kube-scheduler/app/server.go#runCommand

    cc, sched, err := Setup(ctx, opts, registryOptions...)

cmd/kube-scheduler/app/server.go#Setup

    // Parse the command-line flags and options

Registering the built-in scheduling algorithms

pkg/scheduler/scheduler.go#New()

    case source.Provider != nil:

pkg/scheduler/factory.go#createFromProvider()

    func (c *Configurator) createFromProvider(providerName string) (*Scheduler, error) {

pkg/scheduler/algorithmprovider/registry.go#getDefaultConfig()

    func getDefaultConfig() *schedulerapi.Plugins {
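The idea behind provider-based registration can be sketched as a map from provider name to a set of plugins, looked up by name when the scheduler is created. The sketch below is an assumption-heavy illustration: `PluginSet`, `registry`, `newRegistry`, and `lookup` are hypothetical names, and the plugin names are listed only as examples rather than as the actual default set.

```go
package main

import "fmt"

// PluginSet is a hypothetical stand-in for the per-extension-point
// plugin lists that a provider enables.
type PluginSet struct {
	Filter []string
	Score  []string
}

// registry maps a provider name to the plugins it enables.
type registry map[string]PluginSet

// defaultPlugins plays the role of a "default config" function.
// The plugin names here are illustrative only.
func defaultPlugins() PluginSet {
	return PluginSet{
		Filter: []string{"NodeResourcesFit", "NodeName", "TaintToleration"},
		Score:  []string{"NodeResourcesBalancedAllocation", "ImageLocality"},
	}
}

// newRegistry builds a registry with a single built-in provider.
func newRegistry() registry {
	return registry{"DefaultProvider": defaultPlugins()}
}

// lookup resolves a provider name, failing for unknown providers.
func (r registry) lookup(provider string) (PluginSet, error) {
	ps, ok := r[provider]
	if !ok {
		return PluginSet{}, fmt.Errorf("provider %q is not registered", provider)
	}
	return ps, nil
}

func main() {
	ps, err := newRegistry().lookup("DefaultProvider")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("filter plugins:", ps.Filter)
	fmt.Println("score plugins:", ps.Score)
}
```

Keeping the lookup behind a name means a different provider can swap the whole plugin set without touching the code that builds the scheduler.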

Run

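The default parameters are defined in k8s.io/kubernetes/pkg/scheduler/apis/config/v1alpha1/defaults.go, and the main logic is started by calling the Run method. Run mainly does the following:

- Initializes the scheduler object

- Starts the kube-scheduler server. kube-scheduler listens on ports 10251 and 10259: port 10251 requires no authentication and serves information such as healthz and metrics, while 10259 is the secure port and requires authentication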

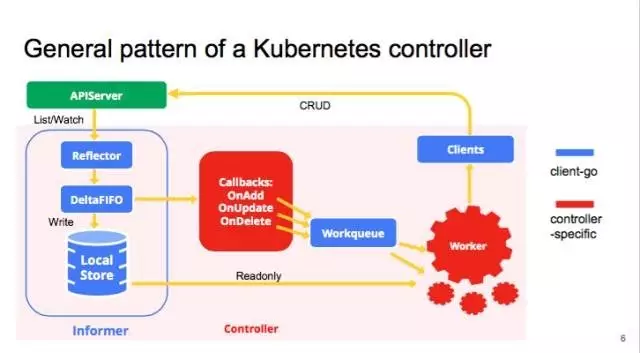

- Starts all informers

- Calls sched.Run() to execute the main scheduling logic

kubernetes/cmd/kube-scheduler/app/server.go


    // Run executes the scheduler's main logic
    func Run(ctx context.Context, cc *schedulerserverconfig.CompletedConfig, sched *scheduler.Scheduler) error {
        // .....
        if cz, err := configz.New("componentconfig"); err == nil {
            cz.Set(cc.ComponentConfig)
        } else {
            return fmt.Errorf("unable to register configz: %s", err)
        }

        // Prepare the event broadcaster
        cc.EventBroadcaster.StartRecordingToSink(ctx.Done())

        // Set up the health checks
        var checks []healthz.HealthChecker
        if cc.ComponentConfig.LeaderElection.LeaderElect {
            checks = append(checks, cc.LeaderElection.WatchDog)
        }

        // Start the healthz server
        if cc.InsecureServing != nil {
            separateMetrics := cc.InsecureMetricsServing != nil
            handler := buildHandlerChain(newHealthzHandler(&cc.ComponentConfig, separateMetrics, checks...), nil, nil)
            if err := cc.InsecureServing.Serve(handler, 0, ctx.Done()); err != nil {
                return fmt.Errorf("failed to start healthz server: %v", err)
            }
        }
        if cc.InsecureMetricsServing != nil {
            handler := buildHandlerChain(newMetricsHandler(&cc.ComponentConfig), nil, nil)
            if err := cc.InsecureMetricsServing.Serve(handler, 0, ctx.Done()); err != nil {
                return fmt.Errorf("failed to start metrics server: %v", err)
            }
        }
        if cc.SecureServing != nil {
            handler := buildHandlerChain(newHealthzHandler(&cc.ComponentConfig, false, checks...), cc.Authentication.Authenticator, cc.Authorization.Authorizer)
            // TODO: handle stoppedCh returned by c.SecureServing.Serve
            if _, err := cc.SecureServing.Serve(handler, 0, ctx.Done()); err != nil {
                // fail early for secure handlers, removing the old error loop from above
                return fmt.Errorf("failed to start secure server: %v", err)
            }
        }

        // Start all informers
        go cc.PodInformer.Informer().Run(ctx.Done())
        cc.InformerFactory.Start(ctx.Done())

        // Wait for the caches to sync before scheduling
        cc.InformerFactory.WaitForCacheSync(ctx.Done())

        // If leader election is enabled, run the scheduler only after winning the election
        if cc.LeaderElection != nil {
            cc.LeaderElection.Callbacks = leaderelection.LeaderCallbacks{
                OnStartedLeading: sched.Run,
                OnStoppedLeading: func() {
                    klog.Fatalf("leaderelection lost")
                },
            }
            leaderElector, err := leaderelection.NewLeaderElector(*cc.LeaderElection)
            if err != nil {
                return fmt.Errorf("couldn't create leader elector: %v", err)
            }

            leaderElector.Run(ctx)

            return fmt.Errorf("lost lease")
        }

        // Without leader election, run the scheduler directly
        sched.Run(ctx)
        return fmt.Errorf("finished without leader elect")
    }

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit kirago杂谈 when reposting.